The Difference Between PyTorch clip_grad_value_() and clip_grad_norm_() Functions | James D. McCaffrey
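The two PyTorch functions named in the title clip gradients in different ways. `torch.nn.utils.clip_grad_value_(parameters, clip_value)` clamps every gradient element independently to `[-clip_value, clip_value]`. `torch.nn.utils.clip_grad_norm_(parameters, max_norm)` computes one norm over all gradients together and, when that norm exceeds `max_norm`, rescales all of them by the same factor. The sketch below reproduces that arithmetic in plain Python so it runs without PyTorch. It makes several assumptions: the helper names `clip_grad_value` and `clip_grad_norm` are hypothetical, gradients are stored as lists of floats (one list per parameter tensor), only the default L2 norm is modeled, and new lists are returned instead of modifying gradients in place as the PyTorch functions do.

```python
import math
from typing import List, Tuple

Grads = List[List[float]]  # assumption: one list of floats per parameter tensor


def clip_grad_value(grads: Grads, clip_value: float) -> Grads:
    """Clamp each gradient element to [-clip_value, clip_value] independently,
    mirroring the arithmetic of torch.nn.utils.clip_grad_value_."""
    return [[max(-clip_value, min(clip_value, g)) for g in tensor] for tensor in grads]


def clip_grad_norm(grads: Grads, max_norm: float, eps: float = 1e-6) -> Tuple[Grads, float]:
    """Rescale all gradients together so their combined L2 norm is at most
    max_norm, mirroring torch.nn.utils.clip_grad_norm_ with norm_type=2.
    Returns the clipped gradients and the norm measured before clipping."""
    # One norm over every element of every tensor, not one norm per tensor
    total_norm = math.sqrt(sum(g * g for tensor in grads for g in tensor))
    # eps guards against division by zero when all gradients are zero
    clip_coef = min(max_norm / (total_norm + eps), 1.0)
    # The same factor is applied everywhere, so the gradient direction is preserved
    clipped = [[g * clip_coef for g in tensor] for tensor in grads]
    return clipped, total_norm


# Hypothetical gradients for two parameter tensors
grads = [[3.0, -4.0], [0.5]]
value_clipped = clip_grad_value(grads, clip_value=1.0)
norm_clipped, total_norm = clip_grad_norm(grads, max_norm=1.0)
print(value_clipped)  # [[1.0, -1.0], [0.5]]
print(norm_clipped, total_norm)
```

With a limit of 1.0, value clipping turns `[3.0, -4.0]` into `[1.0, -1.0]`, which changes the direction of the gradient. Norm clipping instead multiplies every element by roughly 0.199, so the ratio between elements is unchanged and the combined norm drops to about 1.0.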

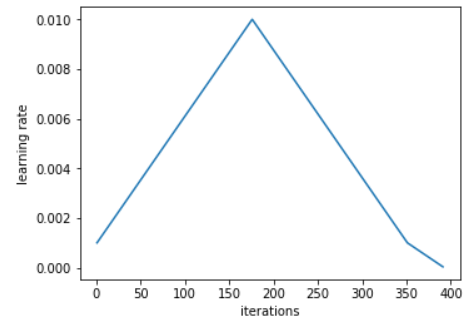

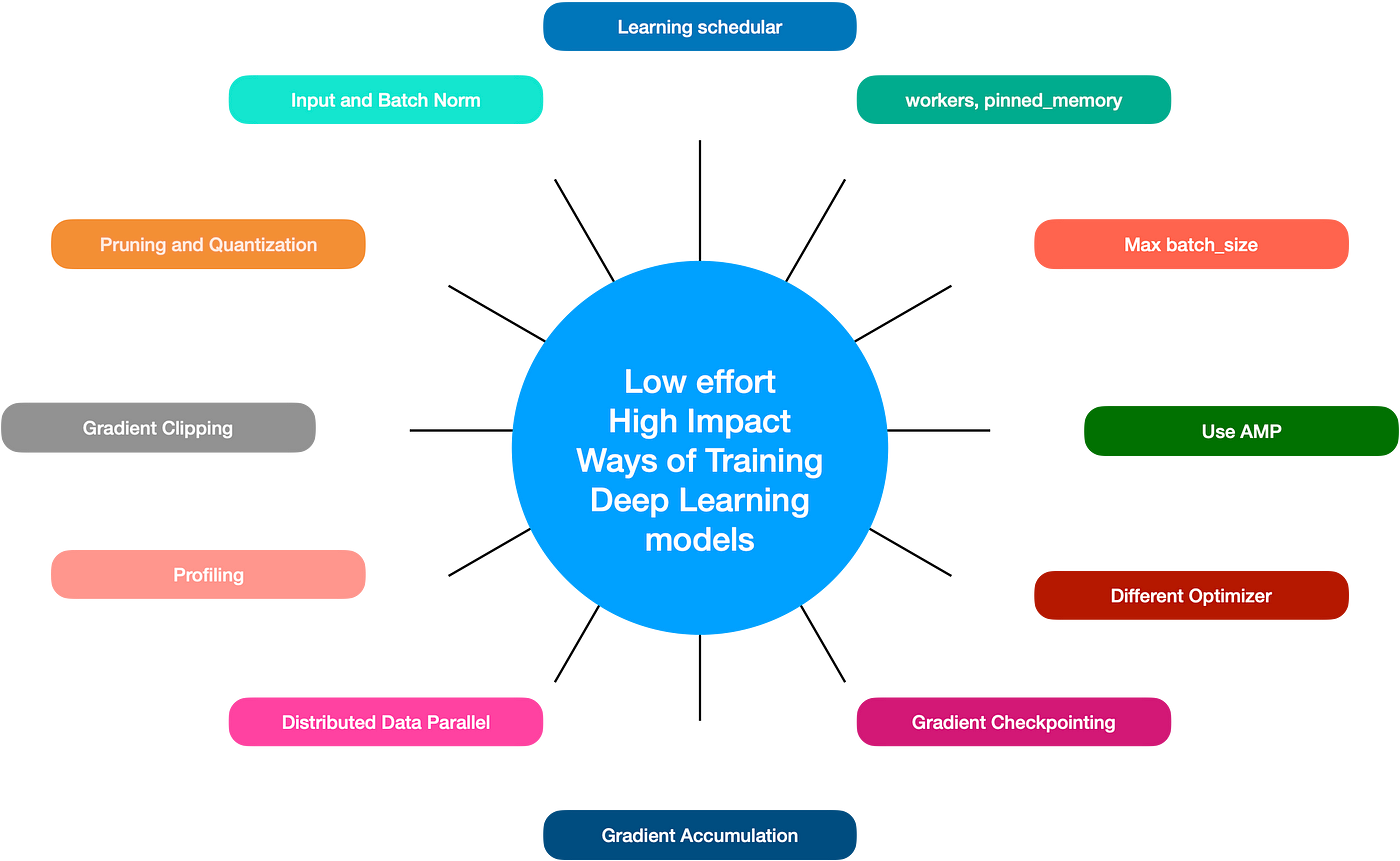

Straightforward yet productive tricks to boost deep learning model training | by Nikhil Verma | Jan, 2023 | Medium

pytorch - How do I implement the 'gradient clipping' in the Neural Replicator Dynamics paper? - Artificial Intelligence Stack Exchange

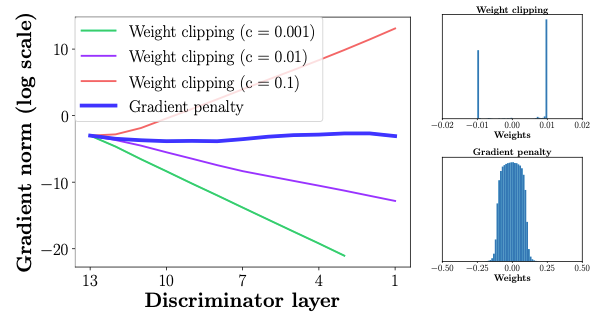

Demystified: Wasserstein GAN with Gradient Penalty(WGAN-GP) | by Aadhithya Sankar | Towards Data Science

GitHub - vballoli/nfnets-pytorch: NFNets and Adaptive Gradient Clipping for SGD implemented in PyTorch. Find explanation at tourdeml.github.io/blog/
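The nfnets-pytorch repository linked above implements Adaptive Gradient Clipping (AGC) from the NFNets paper. Instead of a single global threshold, AGC compares each unit's gradient norm with the norm of that unit's weights and rescales the gradient only when the ratio exceeds a clipping factor λ: if ‖G_i‖ / max(‖W_i‖, ε) > λ, then G_i is scaled to have norm λ · max(‖W_i‖, ε). The following plain-Python sketch illustrates that rule and is not the repository's code. It assumes a weight matrix stored as a list of rows with each row treated as one unit; the function name `adaptive_grad_clip` is hypothetical, and the defaults `clipping=0.01` and `eps=1e-3` are illustrative values.

```python
import math
from typing import List

Matrix = List[List[float]]  # assumption: each row is one unit (e.g. one output neuron)


def unitwise_norm(row: List[float]) -> float:
    """L2 norm of a single unit's weights or gradients."""
    return math.sqrt(sum(x * x for x in row))


def adaptive_grad_clip(weights: Matrix, grads: Matrix,
                       clipping: float = 0.01, eps: float = 1e-3) -> Matrix:
    """Hypothetical sketch of unit-wise Adaptive Gradient Clipping."""
    clipped = []
    for w_row, g_row in zip(weights, grads):
        # eps keeps the threshold positive for zero-initialized weights
        w_norm = max(unitwise_norm(w_row), eps)
        g_norm = unitwise_norm(g_row)
        max_norm = clipping * w_norm
        if g_norm > max_norm:
            # Rescale this unit's gradient so its norm equals clipping * w_norm
            scale = max_norm / g_norm
            clipped.append([g * scale for g in g_row])
        else:
            clipped.append(list(g_row))  # ratio within bounds: leave unchanged
    return clipped


weights = [[3.0, 4.0], [0.0, 0.0], [6.0, 8.0]]
grads = [[0.3, 0.4], [0.006, 0.008], [0.03, 0.04]]
clipped = adaptive_grad_clip(weights, grads)
print(clipped)
```

Because the threshold scales with each unit's weight norm, a large gradient on a unit with large weights may pass through untouched while the same gradient on a unit with small weights is clipped.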

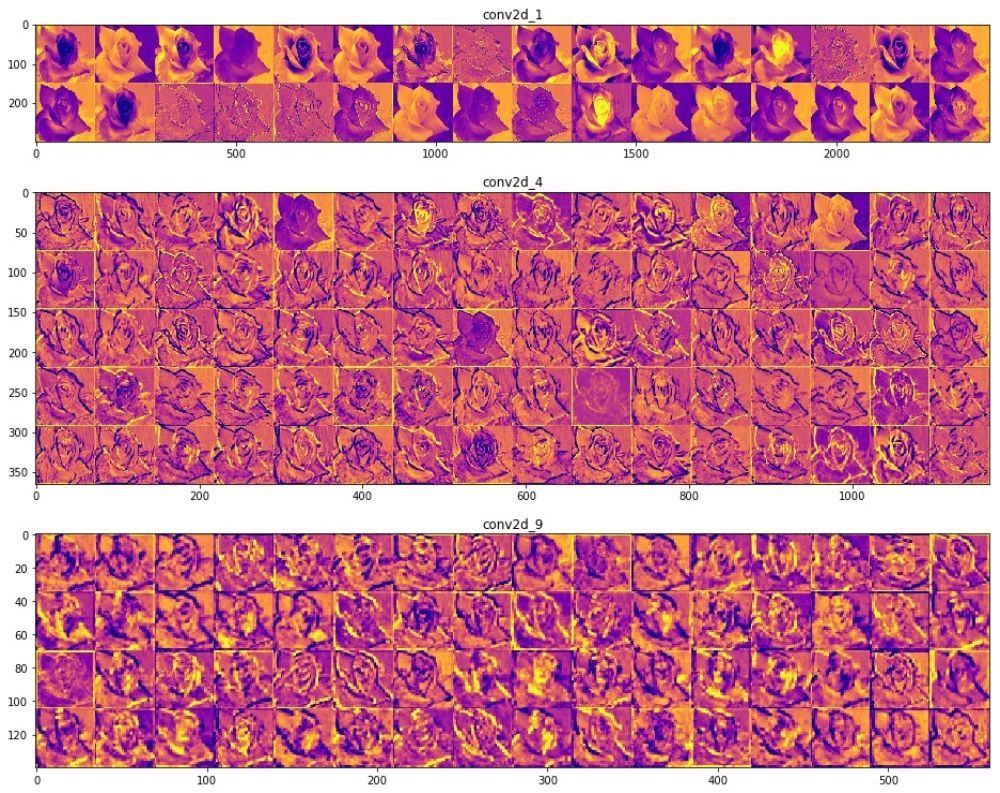

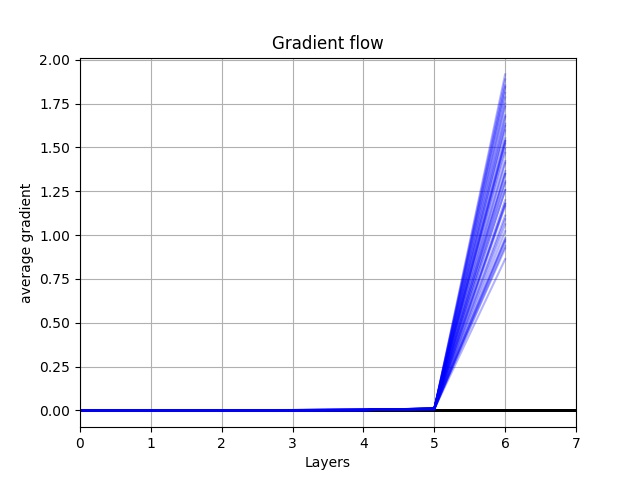

Debugging Neural Networks with PyTorch and W&B Using Gradients and Visualizations on Weights & Biases

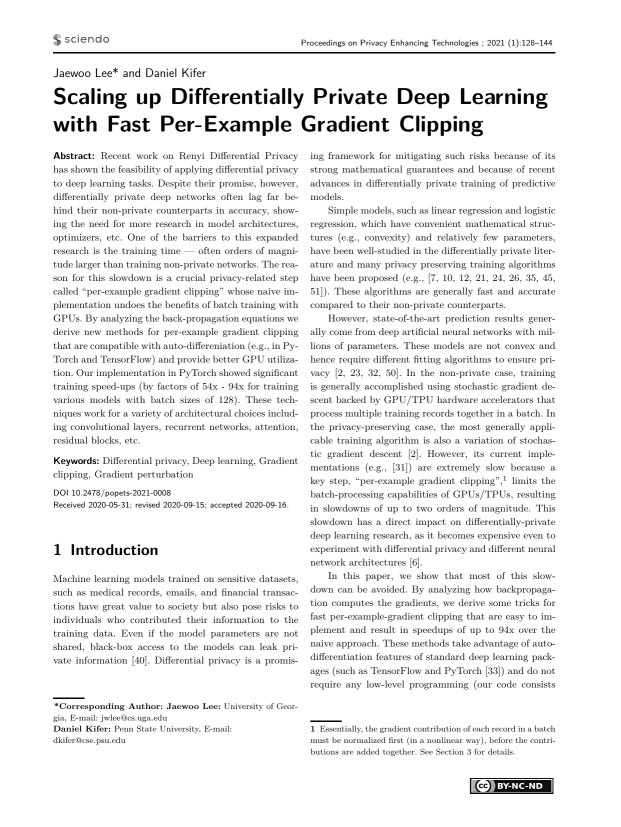

Analysis of Gradient Clipping and Adaptive Scaling with a Relaxed Smoothness Condition | Semantic Scholar

![\[Deep Learning\] Preventing NaN loss with gradient clipping](https://blog.kakaocdn.net/dn/bwh7qu/btrbGzYSqq8/Z3tGpoVkERBdBl9Sw1UEB0/img.png)

![\[Deep Learning\] Preventing NaN loss with gradient clipping](https://blog.kakaocdn.net/dn/c0dyAt/btrbGBvBurj/ydramQQ8UWEBR8IuwOjn61/img.png)
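The two images above come from a Korean blog post whose title translates to "Preventing NaN loss with gradient clipping." In a PyTorch training loop the clipping call sits between `loss.backward()` and `optimizer.step()`, for example `torch.nn.utils.clip_grad_norm_(model.parameters(), max_norm=1.0)`. To show why this helps without depending on PyTorch, the sketch below runs plain gradient descent on a toy loss f(x) = x^4, whose gradient 4x^3 grows very quickly. The loss, the step size of 0.1, the start at x = 3, and the helper names are all assumptions chosen for illustration: the unclipped run overflows to infinity, while clipping the gradient to norm 1.0 keeps every step bounded.

```python
import math
from typing import Optional


def grad_f(x: float) -> float:
    """Gradient of the toy loss f(x) = x**4 (an illustrative assumption)."""
    # x * x * x overflows to inf instead of raising OverflowError as x ** 3 can
    return 4.0 * x * x * x


def clip_by_norm(g: float, max_norm: float) -> float:
    """Rescale a scalar gradient so its norm |g| is at most max_norm."""
    norm = abs(g)
    return g * (max_norm / norm) if norm > max_norm else g


def gradient_descent(x0: float, lr: float, steps: int,
                     max_norm: Optional[float] = None) -> float:
    x = x0
    for _ in range(steps):
        g = grad_f(x)
        if max_norm is not None:
            # Clip after computing the gradient and before the update step
            g = clip_by_norm(g, max_norm)
        x = x - lr * g
        if not math.isfinite(x):
            # Diverged to inf; another step would compute inf - inf = nan
            break
    return x


unclipped = gradient_descent(3.0, lr=0.1, steps=50)
clipped = gradient_descent(3.0, lr=0.1, steps=50, max_norm=1.0)
print(unclipped, clipped)
```

The clipped run still moves toward the minimum at 0, only more slowly while the gradient is large, so `max_norm` trades some speed for stability.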

![\[PyTorch\] Gradient clipping](https://blog.kakaocdn.net/dn/bhDouC/btqJnEUH6N4/cHCdmBGndw51Wu8LV9dFt1/img.png)